Artificial intelligence has integrated itself into society and is developing by the minute, but when it comes to the technology and its capabilities, there are certain things people should look out for.

People must understand how to recognize the dangerous misuse of AI to avoid being misled. Specifically, they should stay educated on its abilities, recognize false information created by it and learn how to use it correctly.

Utilizing AI properly is crucial. One way college students can do so is by taking courses that discuss the uses of artificial intelligence, both good and bad.

Artificial intelligence can be found in programs such as ChatGPT and Adobe’s new generative fill feature that debuted this year. At its core, AI combines computer science with datasets to solve problems and simulate human performance.

But society's dependence on the technology grows as AI's capabilities become more advanced.

In a study conducted by Pew Research Center, 30% of U.S. workers said that over the next 20 years, the use of artificial intelligence in the workplace will equally help and hurt them. Similarly, in a 2022 Pew Research study, 19% of American workers were in jobs in which the most important activities may be either replaced or assisted by AI.

It is imperative that college students take the capabilities of AI into account, as they could soon be entering a workforce that utilizes the technology. They should take courses on AI and educate themselves on the technology so they can adapt when it inevitably becomes integrated into their work.

The University of South Carolina offers a certificate in artificial intelligence as well as a new special focus course that will delve into the ethics of AI.

Jason Porter, a professor in the School of Journalism and Mass Communications, has examined the capabilities of interactive technology.

“It is a new tool that is out there, that is being used,” Porter said. “If you don’t embrace it and don’t learn with it, you will be left behind.”

However, AI isn’t made to run on its own, despite what science fiction movies such as "Terminator" suggest. It is a tool that users must learn to make their own by managing it.

AI's presence might be unavoidable, but 52% of U.S. adults are already more concerned than excited about the role artificial intelligence will play in everyday life, according to a study conducted by Pew Research Center.

The darker side of AI needs to be addressed, too.

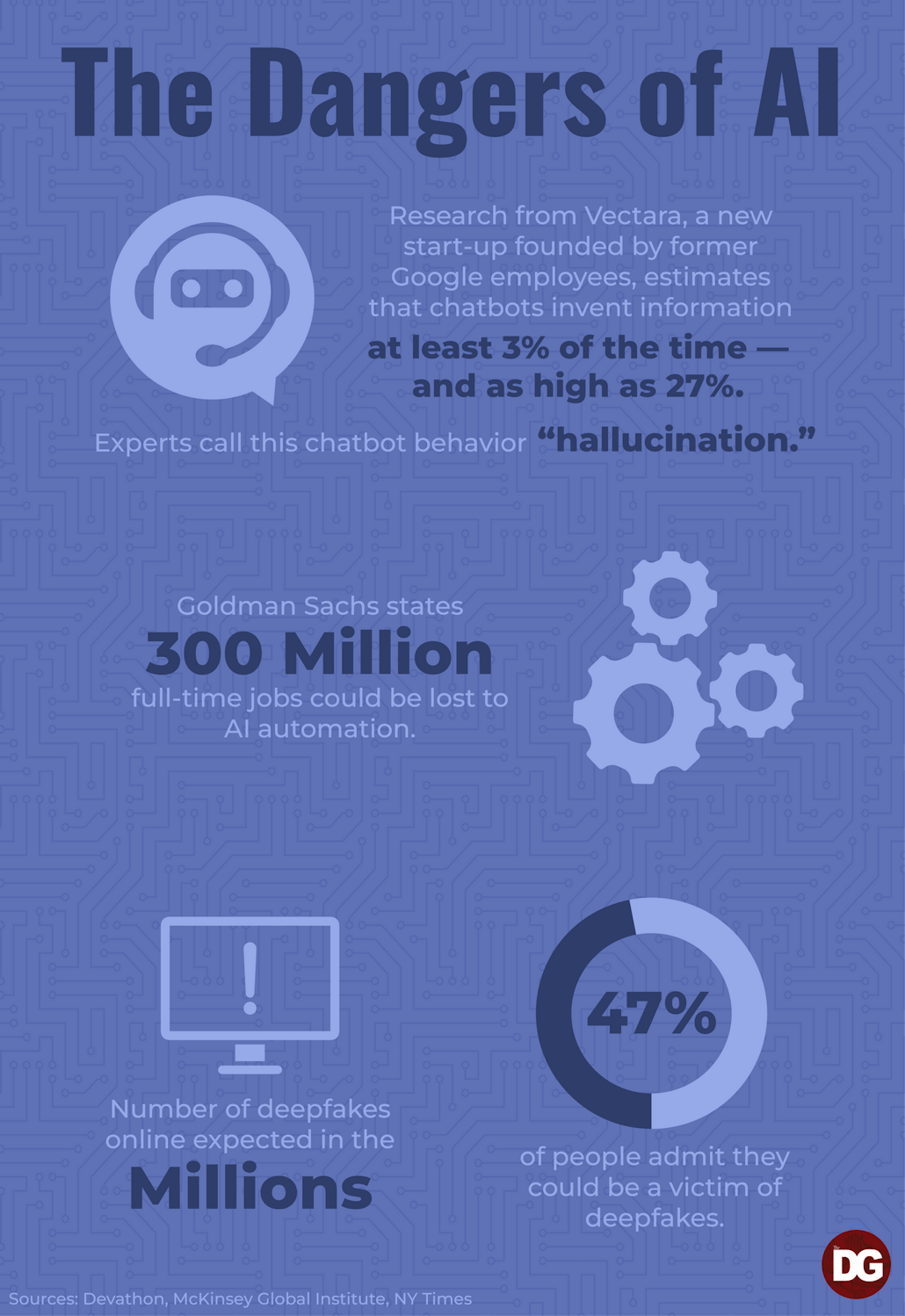

Behind the walls of useful ChatGPT applications and popular social media filters lie deepfakes: digitally altered content manipulated to mimic someone's likeness. There are now deepfakes of celebrities and mind-bending recreations of human portraits, and they are everywhere, according to BuzzFeed News.

An article from the New York Times explores the dangerous capabilities of AI as well as its contributions to disinformation, something society is already susceptible to. The technology has been used to create pictures of former UK Prime Minister Boris Johnson dancing in a crowded street as well as ones that depict a staged moon landing, feeding an already popular conspiracy theory.

Society is at risk of falling for these deepfake traps and accepting them as truth. The most frightening aspect of that scenario is that people would start treating these lies as established fact, leaving society weak and prone to accepting anything it sees.

“The public does not like to search for correctness. They go for easy,” Porter said.

Deepfakes that circulate on platforms such as X, formerly known as Twitter, prey on people's tendency to believe everything they see. More than 75% of consumers are already concerned about misinformation from AI, according to Forbes.

One popular category of deepfakes features political figures, such as the president of the United States.

Presidential deepfakes have been crafted for amusement, such as the videos of President Joe Biden, Donald Trump and Barack Obama roasting each other or playing video games. Clips like those are easy to distinguish, as they are humorous and outlandish, but users can also make them to deceive rather than entertain.

There is technology readily available for someone to craft a realistic video of, say, Biden discussing anything the creator wishes. That scenario could lead to social unrest and waves of distrust throughout the country, which is frightening to imagine.

Biden issued an executive order on Oct. 30 that addresses the public's growing concerns about AI. The order essentially does two things: it compels AI companies to allow governmental testing of their programs, and it asks the Department of Commerce to issue guidelines for watermarking artificially generated content. It does not, however, require AI-generated content to be labeled as such.

While signing the order, Biden said he had come across a realistic deepfake of himself and asked, “When the hell did I say that?”

If AI can fool the president, what’s to say it won’t fool you?

People should remain educated on AI's capabilities so they are not fooled by its misuse. The best way to do that is to keep up with the latest developments in AI.

Ultimately, it's important to weigh both the benefits artificial intelligence offers and the threats it poses to the public. But so far, AI's integration into society has brought more peril than progress.

The less society understands about how AI works, the wider the window of opportunity to use it maliciously, so it is crucial that people start learning now.